Ask anyone about the quality of their data and you’re likely to get a wide range of answers. The interesting thing about data quality is that it is so bound up in context that we often lose sight of just how much data we have out there, where specifically it is and how important it may or may not be.

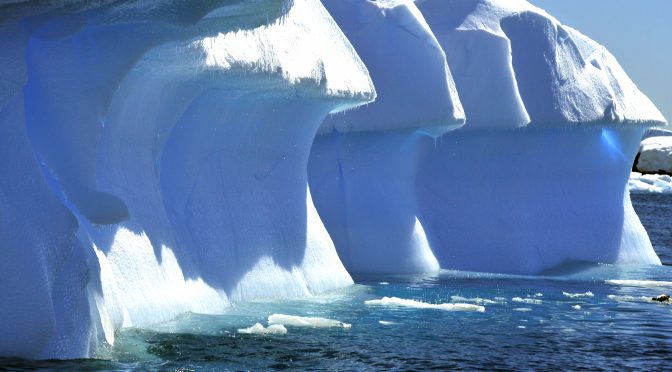

The concept of the ‘iceberg of ignorance’ was first published in 1989 by consultant Sidney Yoshida. Yoshida published his study results at a time when Western organisations were becoming aware that Japanese companies were producing better quality products at lower prices. Although Yoshida’s study involved numerous mid-sized organizations, generally speaking, the same findings tend to be present in organizations of all sizes.

The study led to the popular idea that front line workers knew all of the problems, supervisors were aware of almost three quarters, middle managers were aware of just less than a tenth and senior executives were aware of only 4% of the problems going on in the business. Since then the concept has been loosely applied to general management bashing. One flaw in the theory, of course, is that although this could be true for what happens on a manufacturing production floor, it doesn’t tell the full story of all the other issues that a business might have, including issues that may actually be obscured from front line personnel.

I like the results of the study at face value, so let’s consider how it might apply in the context of data quality.

common sense tells us this could be true

It follows that, as a business leader, if you are not intimately involved in the use of particular data on a day-to-day basis, then you won’t know much about its quality.

The further away from the data you are, the less you know about it and about the challenges of collecting and maintaining it.

Transactions with business partners rely on good data to be executed effectively. Bad master or reference data leads to bad transactions, and these have a compounding effect which in turn carries an operational cost. You can hardly be expected to understand whether your data is good or bad if you have no insight into it.

Unless there are wild variances that cannot easily be explained, the leaders of the business generally accept and believe that all necessary controls are in place, while also accepting that there is an ever-present risk of bad data creeping in.

While Yoshida’s revelations are shocking to many, they should hardly be surprising. What is more important is this: if the leaders of an organization are barely aware that they have a data problem, will they bother to invest in mechanisms to improve data quality? Those in the trenches, at the coal-face and on the front lines of transaction processing and data management have a responsibility to keep the “higher ups” informed. If they don’t, they’re not doing themselves any favours.

The issue is made more complex by the fact that many businesses are still struggling to understand what their data is actually worth. So even if you accept and understand that you have a data quality issue, are you reporting on it, and is it considered important enough to do something about?

Are the implications of bad data fully understood? Looking to how this situation can be addressed, there are several elements to keep in mind.

The first issue is reporting on data quality in general terms.

Irrespective of whether your business engages in active or passive data governance, having a deliberate, ongoing approach to data quality is key to improving it. Data that is not maintained regularly will go stale and deteriorate even if it was 100% correct at the time of initial capture.

Another element is routine and regular analysis and profiling of your data. Profiling is the act of analysing the contents of your data. Not all data needs to be profiled, though almost all data could be profiled.
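To make profiling a little more concrete, here is a minimal sketch in Python of what profiling a single field might look like. The `profile_column` function and the vendor records are invented purely for illustration, and the sketch assumes that blank strings count as missing values. Real profiling tools go a great deal further.

```python
from collections import Counter


def profile_column(records, field):
    """Summarise one field across a list of dict records (illustrative only)."""
    values = [r.get(field) for r in records]
    # Assumption: None and blank or whitespace-only strings count as missing.
    present = [v for v in values if v is not None and str(v).strip() != ""]
    total = len(values)
    return {
        "field": field,
        "total": total,
        "missing": total - len(present),
        "completeness": len(present) / total if total else 0.0,
        "distinct": len(set(present)),
        "top_values": Counter(present).most_common(3),
    }


# Hypothetical vendor records, made up for this example.
vendors = [
    {"id": 1, "name": "Acme Ltd", "country": "GB"},
    {"id": 2, "name": "Acme Ltd", "country": ""},
    {"id": 3, "name": "Globex", "country": "US"},
    {"id": 4, "name": "Initech", "country": None},
]

country_profile = profile_column(vendors, "country")
print(country_profile)
```

Even a simple summary like this gives you a number you can report: here, half of the vendor records have no usable country.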

audit and data quality

Data that has a financial impact on the reporting of the status of the business to shareholders and investors is generally governed by audit and compliance. This takes into account vendor and customer data attributes and some financially impacting transaction processing, and it looks for duplicates, anomalies, exceptions and events that seem to deviate from the norm: outliers, if you will.

Audit will typically look at the process of data collection, creation and maintenance and assess whether all the checks and balances are in place and all the necessary approvals and oversight are being provided. It doesn’t typically look at the data in aggregate, though, normally just at samples of data.

data profiling for insights

The other side of data profiling and analysis often focuses on the master data and reference data elements and looks at the aggregate. This type of data profiling will look for duplicates and completeness issues, but with a focus on the overall objectives of the data for the business rather than on counteracting or identifying fraudulent or deliberate attempts to introduce confusion into the data.
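As a rough sketch of what looking for duplicates in aggregate could mean, the Python below groups master data records by a normalised name and flags any group with more than one record. The function names, the customer records and the normalisation rule (lower-case, drop punctuation, collapse spaces) are all assumptions made for this example. Matching in practice usually needs fuzzier rules than this.

```python
import re
from collections import defaultdict


def normalise_name(name):
    """Lower-case, drop punctuation and collapse repeated spaces."""
    cleaned = re.sub(r"[^a-z0-9 ]", "", name.lower())
    return " ".join(cleaned.split())


def find_duplicate_candidates(records, field):
    """Group record ids by normalised field value; keep groups of two or more."""
    groups = defaultdict(list)
    for record in records:
        groups[normalise_name(record[field])].append(record["id"])
    return {key: ids for key, ids in groups.items() if len(ids) > 1}


# Hypothetical customer master records, made up for this example.
customers = [
    {"id": 101, "name": "Acme Ltd."},
    {"id": 102, "name": "ACME  LTD"},
    {"id": 103, "name": "Globex"},
]

duplicates = find_duplicate_candidates(customers, "name")
print(duplicates)
```

The output is a list of candidates for a person to review, not a verdict: two records sharing a normalised name may still be two genuinely different customers.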

Regular reporting on how business teams and data quality improvement initiatives are driving toward better quality data is important for giving the management board insight into the quality of the data and making sure that it stays a general focus.

Your business should aim to report this periodically, based on key performance indicators around duplicate identification and completeness, while also tracking the before and after state and the implications of correction and remediation for transaction processing.
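One simple way to track the before and after state is to keep a snapshot of the indicators for each reporting period and report the change between them. The sketch below assumes two indicators, a duplicate rate and a completeness rate, and the figures are hypothetical, not taken from any real business.

```python
def kpi_change(before, after):
    """Return the change in each indicator between two reporting periods."""
    # Rounded to four decimal places to keep floating-point noise out of reports.
    return {name: round(after[name] - before[name], 4) for name in before}


# Hypothetical snapshots from two reporting periods.
before = {"duplicate_rate": 0.08, "completeness": 0.91}
after = {"duplicate_rate": 0.05, "completeness": 0.96}

change = kpi_change(before, after)
for name, delta in change.items():
    print(f"{name}: {delta:+.2%}")
```

A falling duplicate rate alongside rising completeness is the kind of evidence that shows management whether remediation work is actually paying off.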

It is only possible to elevate the importance of data quality, and of course to secure funding for deeper and more comprehensive initiatives, if you have empirical evidence of the problems and of the goals that you’re trying to achieve.

Finally, remember that if you’re below the waterline and continue to keep all your data quality issues unreported and obscured from management, it becomes really hard to get executive “buy in” for data quality improvement initiatives.

If you’re part of the community above the waterline, you owe it to yourself to ask for evidence that the business data is in good shape and improving in quality.

Photo by Luis Bartolomé Marcos